- The CyberLens Newsletter

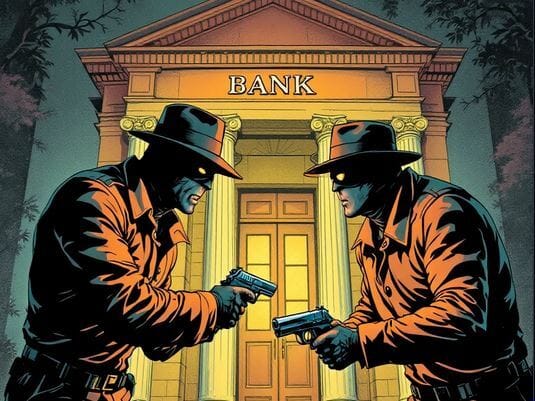

Hackers Are Now Weaponizing AI Chatbots to Hijack Your Bank Accounts

The Rise of Conversational Cyber-Crime and Why Financial Institutions Must Rethink Their AI Security Strategies

Interesting Tech Fact:

Did you know that some of the world’s largest banks quietly use “honey accounts” (decoy bank accounts with realistic transaction histories and fake customer data) as part of their cybersecurity defense strategy? These digital traps are designed to lure cyber-criminals and fraud bots into interacting with them, triggering alerts and allowing threat analysts to study attack patterns in real time. By analyzing how attackers attempt to breach these dummy accounts, banks can refine their fraud detection algorithms and block similar threats before they reach real customers, making deception a powerful tool in modern cyber defense.

Introduction

In the rapidly evolving landscape of cyber threats, a new and alarming trend is emerging: hackers are now leveraging AI-powered chatbots to socially engineer their way into bank accounts. Phishing emails and brute-force attacks are giving way to intelligent, context-aware conversations generated by artificial intelligence.

The same chatbots that are transforming customer service and automating business workflows are being weaponized with surgical precision by threat actors. These AI-driven social engineering tools can convincingly impersonate banks, customer support agents, or even family members, conducting real-time manipulation that bypasses traditional fraud detection systems.

Here we explore the mechanics, implications, and urgent countermeasures surrounding this new cyber frontier, where AI isn’t just defending networks but attacking them.

The Rise of Conversational Cyber-Crime

While AI chatbots like ChatGPT, Gemini, and open-source LLMs (Large Language Models) are celebrated for their utility and efficiency, cyber-criminals are exploiting their power to create sophisticated scams that mimic legitimate human interaction.

In the past, phishing emails were often easy to spot: full of grammar errors, unusual requests, and broken formatting. Today’s AI chatbots generate fluent, highly convincing content in multiple languages, tailored to the victim’s background, location, and behavioral patterns.

Some of the most recent documented attacks involve chatbots posing as:

- Bank customer service agents, asking users to “verify” information.
- Credit card fraud teams, urgently requesting 2FA codes or login credentials.
- Loan department reps, luring users into installing malicious apps or clicking on spoofed websites.

These bots can run multi-turn conversations, asking questions, answering doubts, and building trust, and then strike at the right psychological moment. Unlike static phishing emails, AI chatbots adapt to the victim's replies within the session, making them far more dangerous and difficult to detect.

How Hackers Are Building These Malicious Bots

Hackers don’t need to build a language model from scratch. Many rely on open-source or jailbroken versions of existing LLMs, which can be adapted for specific malicious purposes using techniques like:

- Prompt engineering: Supplying input-output examples in the prompt that steer the model to mimic banking terminology, verification workflows, and scripted human interactions.
- Fine-tuning on stolen scripts: Training the model further on real-world scripts taken from banks’ customer service logs to make it sound authentic.
- API integration: Connecting the chatbot to messaging apps like WhatsApp, Telegram, or Facebook Messenger, or to fake websites that mimic online banking portals.

Some AI-as-a-service platforms are also being repackaged and sold on dark web marketplaces, complete with phishing templates and chatbot training guides tailored to financial fraud.

Notably, “fraud-as-a-service” botnet groups are now advertising plug-and-play chatbot systems trained specifically to bypass Know Your Customer (KYC) verifications and deceive users into surrendering sensitive data.

A New Breed of Impersonation and Voice Fraud

In more advanced cases, cyber-criminals are integrating AI chatbots with voice cloning technology. This allows attackers to call a victim and simulate a trusted voice, whether a banker, relative, or colleague, while the AI chatbot responds in real time through text or text-to-speech conversion.

For example, a victim might receive a call from someone sounding like their actual bank advisor, who then instructs them to “verify their login attempt” by reading a one-time password (OTP) aloud. That OTP is then used instantly by the attacker to gain access.

AI-powered deepfakes + chatbots = next-gen social engineering. It’s no longer a hypothetical risk; it’s happening in real time.

Why Traditional Fraud Detection Isn’t Enough

Banks and financial institutions have relied heavily on anomaly detection, device fingerprinting, and static fraud indicators to prevent unauthorized access. But AI chatbot attacks are surgical and adaptive, often falling below detection thresholds.

Here’s why:

- No malware needed: These attacks are behavioral, not technical, making them nearly invisible to antivirus or endpoint detection tools.
- Account takeover is seamless: The attacker never touches the victim’s device; the user willingly hands over access credentials.
- Language and tone mimicry: Chatbots replicate the tone, syntax, and communication patterns of real bank representatives or family members.
- 24/7 automation: Bots can run social engineering attempts at scale, continuously probing multiple users for entry points.

Worse, AI-powered social engineering is nonlinear: a single attack can branch into different approaches depending on how the victim responds, just like a real conversation. This defeats rule-based filters that rely on specific keyword patterns.
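To make the keyword-filter weakness concrete, here is a minimal sketch. The blocklist patterns and the two sample messages are made up for illustration and are not taken from any real bank's filter; the point is only that a paraphrased request asks for the same thing without matching any listed phrase.

```python
import re

# Hypothetical blocklist: phrases a static, rule-based filter might look for.
BLOCKED_PATTERNS = [
    r"\bverify your account\b",
    r"\bone[- ]time password\b",
    r"\bsend (me )?your (otp|pin)\b",
]


def keyword_filter_flags(message: str) -> bool:
    """Return True if the message matches any blocked pattern."""
    text = message.lower()
    return any(re.search(pattern, text) for pattern in BLOCKED_PATTERNS)


# A scripted phishing line that uses the expected wording.
scripted = "Please verify your account by sending your one-time password."
# An adaptive rephrasing that requests the same code in different words.
adaptive = "To keep your card active, just read me the six digits we texted you."

print(keyword_filter_flags(scripted))  # True
print(keyword_filter_flags(adaptive))  # False
```

Adding more patterns narrows the gap but does not close it, since a generative model can keep rephrasing the same request.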

Real-World Incidents and Alarming Statistics

In early 2025, a financial services firm in Europe reported a major breach after customers were lured via WhatsApp messages by AI chatbots posing as fraud protection agents. Over $1.2 million was siphoned in under 72 hours.

A joint investigation by Interpol and Europol uncovered a Telegram botnet ring built on chatbot APIs and custom banking script playbooks, targeting over 50,000 users globally.

In a dark web forum called "SynthexCorp", members discussed how to fine-tune LLMs to predict victim behavior using regional idioms and time-zone-based response delays for realism.

What Financial Institutions Must Do Now

This is not just an IT problem—it’s a crisis of trust. The human layer is now the most vulnerable layer, and attackers are leveraging psychological AI warfare.

Here’s how financial institutions and cyber professionals must respond:

1. Adopt AI-Based Fraud Detection

Deploy counter-AI systems capable of identifying abnormal conversation patterns or flagging inconsistencies that are invisible to traditional fraud systems. These include:

- Conversational fingerprinting
- Real-time sentiment analysis
- Bot interaction profiling
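As one possible reading of bot interaction profiling, the sketch below looks at how quickly and how uniformly the other party replies. The function names, the input shape, and both thresholds are assumptions chosen for illustration, not tuned values, and a real system would combine many more signals. Attackers who add randomized delays, as described earlier, would weaken the latency signal.

```python
from statistics import mean, pstdev


def profile_replies(replies):
    """Summarize reply timing.

    replies: list of (seconds_to_reply, reply_length_in_chars) tuples.
    """
    latencies = [seconds for seconds, _ in replies]
    rates = [chars / seconds for seconds, chars in replies if seconds > 0]
    average = mean(latencies)
    return {
        # Coefficient of variation: low values mean suspiciously uniform timing.
        "latency_cv": pstdev(latencies) / average if average else 0.0,
        # Fastest observed "typing" rate in characters per second.
        "max_chars_per_sec": max(rates) if rates else 0.0,
    }


def looks_automated(replies, min_cv=0.25, max_human_cps=12.0):
    """Flag a conversation whose timing is too uniform or too fast.

    Both thresholds are illustrative assumptions.
    """
    if not replies:
        return False
    profile = profile_replies(replies)
    return (
        profile["latency_cv"] < min_cv
        or profile["max_chars_per_sec"] > max_human_cps
    )


bot_like = [(2.0, 180), (2.1, 220), (1.9, 200)]
human_like = [(8.0, 40), (25.0, 120), (4.0, 15)]
print(looks_automated(bot_like))    # True
print(looks_automated(human_like))  # False
```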

2. Implement Multi-Layered Identity Verification

Beyond 2FA, adopt passive biometric and contextual indicators (typing speed, device tilt, geolocation) and behavioral analytics to verify real users. The fewer weak points attackers can exploit, the better.
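A passive typing-speed check can be sketched as a comparison between the current session and the account holder's own history. The function names, the use of inter-key intervals in milliseconds, and the threshold of 3 standard deviations are all assumptions for illustration; production behavioral analytics would use richer features.

```python
from statistics import mean, stdev


def typing_anomaly_score(baseline_ms, session_ms):
    """Z-score of the session's mean inter-key interval against the baseline.

    baseline_ms: historical inter-key intervals for this user (at least two).
    session_ms: inter-key intervals observed in the current session.
    """
    mu = mean(baseline_ms)
    sigma = stdev(baseline_ms)
    session_mean = mean(session_ms)
    if sigma == 0:
        return 0.0 if session_mean == mu else float("inf")
    return abs(session_mean - mu) / sigma


def requires_step_up(baseline_ms, session_ms, threshold=3.0):
    """Ask for extra verification when typing rhythm deviates strongly."""
    return typing_anomaly_score(baseline_ms, session_ms) > threshold


baseline = [180, 200, 190, 210, 195, 205]
print(requires_step_up(baseline, [185, 200, 198]))  # False
print(requires_step_up(baseline, [60, 65, 58]))     # True
```

A failed check here would trigger additional verification rather than an outright block, since a tired user or a new keyboard can also shift typing rhythm.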

3. Integrate Human + AI Response Systems

Leverage AI-powered fraud detection systems, but augment them with human oversight, especially for high-value transactions. Only humans can assess nuance in questionable behavior—AI alone is not enough.
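One way to picture the human-plus-AI split is a routing rule that lets the model clear low-risk activity while sending high-value or ambiguous transactions to a person. The `Transaction` fields, the `route` function, and every threshold below are hypothetical; `risk_score` stands in for the output of whatever fraud model an institution already runs.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    amount: float
    risk_score: float  # 0.0 to 1.0, from a hypothetical fraud model


def route(tx, amount_limit=10_000.0, review_above=0.5, block_above=0.9):
    """Decide who handles a transaction. Thresholds are illustrative."""
    if tx.risk_score >= block_above:
        return "block"
    # High-value or ambiguous cases go to a human analyst.
    if tx.amount >= amount_limit or tx.risk_score >= review_above:
        return "human_review"
    return "auto_approve"


print(route(Transaction(amount=120.0, risk_score=0.10)))     # auto_approve
print(route(Transaction(amount=25_000.0, risk_score=0.10)))  # human_review
print(route(Transaction(amount=120.0, risk_score=0.95)))     # block
```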

4. Educate Customers Aggressively

Launch aggressive awareness campaigns highlighting how AI chatbot scams work. Use real examples. Train customers to never trust unsolicited requests for banking information, regardless of how professional the interaction sounds.

5. Collaborate with Regulatory Bodies

Work with cybersecurity regulators and AI watchdogs to develop standardized frameworks for AI chatbot misuse detection, especially in the financial services sector. This includes mandatory logging, traceable AI interactions, and red team audits.
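Traceable logging could take many forms. One common approach, shown here as an assumption rather than a regulatory requirement, is a hash-chained log in which each entry commits to the one before it, so later edits to a recorded AI interaction become detectable. The event fields are made up for the example.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash for the first entry


def _digest(prev_hash, event):
    """Hash the previous entry's hash together with this event."""
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log, event):
    """Append an event, linking it to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append(
        {"event": event, "prev_hash": prev_hash, "hash": _digest(prev_hash, event)}
    )


def verify_chain(log):
    """Return True only if no entry has been altered or reordered."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_entry(log, {"channel": "chat", "actor": "bot", "text": "How can I help?"})
append_entry(log, {"channel": "chat", "actor": "user", "text": "Check my balance"})
print(verify_chain(log))  # True

log[0]["event"]["text"] = "Please share your OTP"  # simulate tampering
print(verify_chain(log))  # False
```

In practice the latest hash would also be stored somewhere the logging system cannot rewrite, so that truncating the log is detectable as well.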

What’s Next: The AI Arms Race in Cybersecurity

As AI tools become more advanced, the line between attacker and defender will blur even further. Chatbots trained to steal data will be countered by AI watchdogs designed to sniff out deceptive patterns. In this evolving arms race, whichever side adopts smarter, faster AI wins.

For financial institutions, the urgency is clear: this is not science fiction. It’s a new attack vector being exploited right now. If your security playbook doesn’t address AI-driven threats, it’s already outdated.

Cybersecurity professionals must take the lead in creating a future where AI defends trust instead of eroding it.

CyberLens Conclusion

The use of AI chatbots by hackers is a turning point in modern cybercrime. It's no longer about brute force or malware; it's about psychology, speed, and persuasion at machine scale. Defending against these threats requires a new mindset, one that combines technical defense with behavioral intelligence and aggressive public awareness. The future of finance will be defined not just by innovation, but by how well we can protect the conversations that power it.